This repository contains instructions and resources for helping you setup a complete end-to-end solution showcasing integration of LoRaWAN sensors into a digital twin solution.

Note: the instructions assume you are using The Things Stack as your LoRaWAN Network Server, but they can easily be adapted to work for other network servers (or any other source of sensor data really).

Introduction

In this tutorial, we will be setting up an end-to-end IoT solution for monitoring and visualizing in real-time the condition of a building equipped with wireless, low-power, LoRaWAN sensors.

We will be connecting off-the-shelf temperature/humidity sensors to a private LoRaWAN network server hosted on Microsoft Azure, and interfaced with an Azure Digital Twins environment. Using IoT Plug-and-Play, telemetry being sent by the sensors will automatically be reflected in their digital twin counterpart, and those twins will be used to drive a real-time 3D representation of the building in Blender.

Pre-requisites

Software

- Blender 2.91 (or later) — Blender is a free and open-source 3D modeling and rendering tool. You can download it from the project website.

- An Azure Subscription — as you will be deploying several services in the Microsoft Azure cloud, you will need an Azure subscription. You can register for a free account here.

- (optionally) Visual Studio Code — while not mandatory, having a local install of Visual Studio Code will be useful. Alternatively, you may want to simply open this repository in GitHub CodeSpaces.

Hardware

- A LoRaWAN gateway. This tutorial has been tested with the Dragino LPS8 Indoor LoRaWAN Gateway, but virtually any LoRaWAN gateway should work.

- Dragino LHT-65 temperature sensors.

| Dragino LPS8 Indoor Gateway | Dragino LHT-65 Temperature Sensor |

|---|---|

|

|

Structure of the repository

As you may be interested in starting by directly exploring the various bits and code snippets that make up the end-to-end solution, below is a quick overview of the different resources contained in this repository:

lorawan-adt-demo

├─> blender-integration # Blender resources (scene, Python scripts, ...)

├─> models # DTDL model files for the various entities (twins) of our environment

├── README.md # This README file :)

├─> ttn-to-adt-azurefn

│ └─> ttn-webhook # The serverless function (webhook) routing TTN uplink messages to ADT

└─> ttn_payload_formatters # The Javascript "codecs" for converting the payloads of the LoRaWAN sensors used in the demo

Steps

Setting up a LoRaWAN Network Server

The recommended approach is to follow the instructions in this repository, and to get your own network server, based on The Things Stack, up and running on Azure.

If you want to use The Things Network v2, or any other network server really, the instructions below will still apply, granted that you adapt them in order to:

-

decode the uplink packets using whichever mechanism your LNS exposes for enabling payload formatting/decoding.

-

configure your application server so that it makes HTTP POST calls to the Azure Functions webhook that we will be setting up later whenever uplink messages are being received.

Now, for sensor data to eventually flow all the way from The Things Stack to Azure Digital Twins, we need to start at the beginning, and get them sensors connected! In the following steps, you will provision your gateway, sensors, and LoRaWAN application in The Things Stack.

Provisioning a LoRaWAN gateway

The steps below are assuming that you’ve already gone through the steps needed to make sure that your Dragino LPS8 gateway (or equivalent) is already properly configured to connect to the Internet, either via Wi-Fi or an Ethernet cable.

-

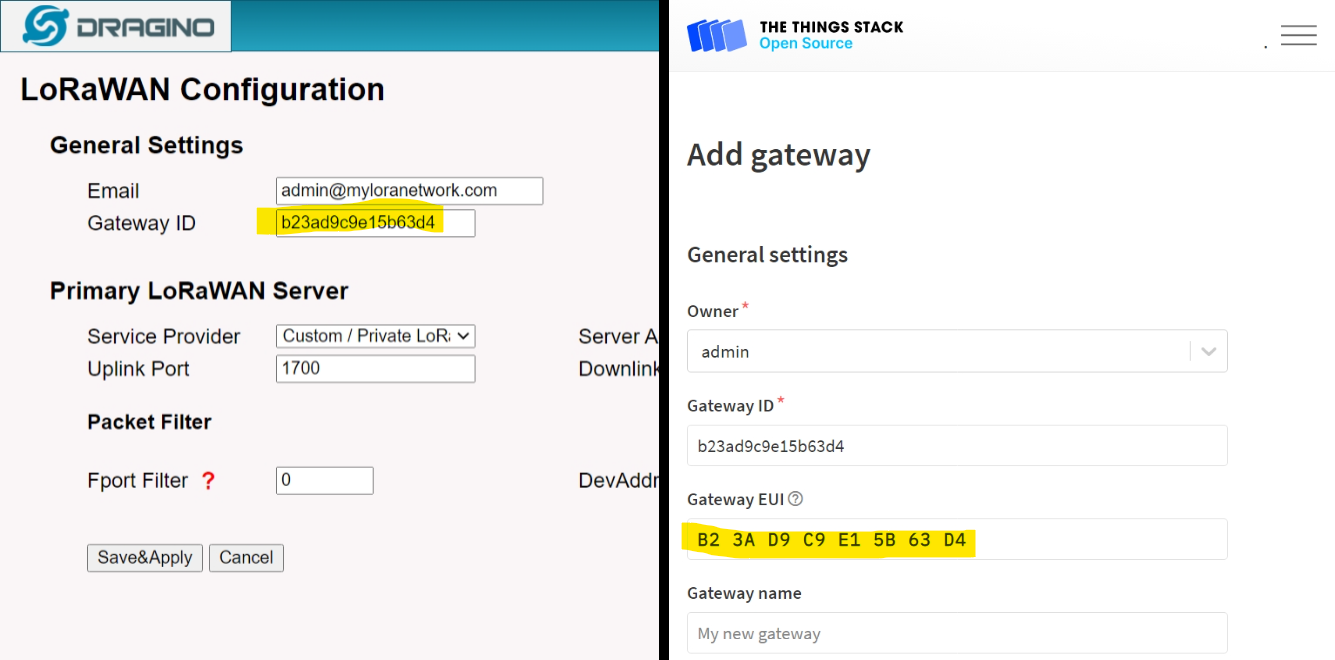

Follow the official instructions to register your gateway in The Things Stack.

Set the Gateway EUI to the same ID that can be found in the “LoRaWAN Configuration” page of your Dragino gateway admin console.

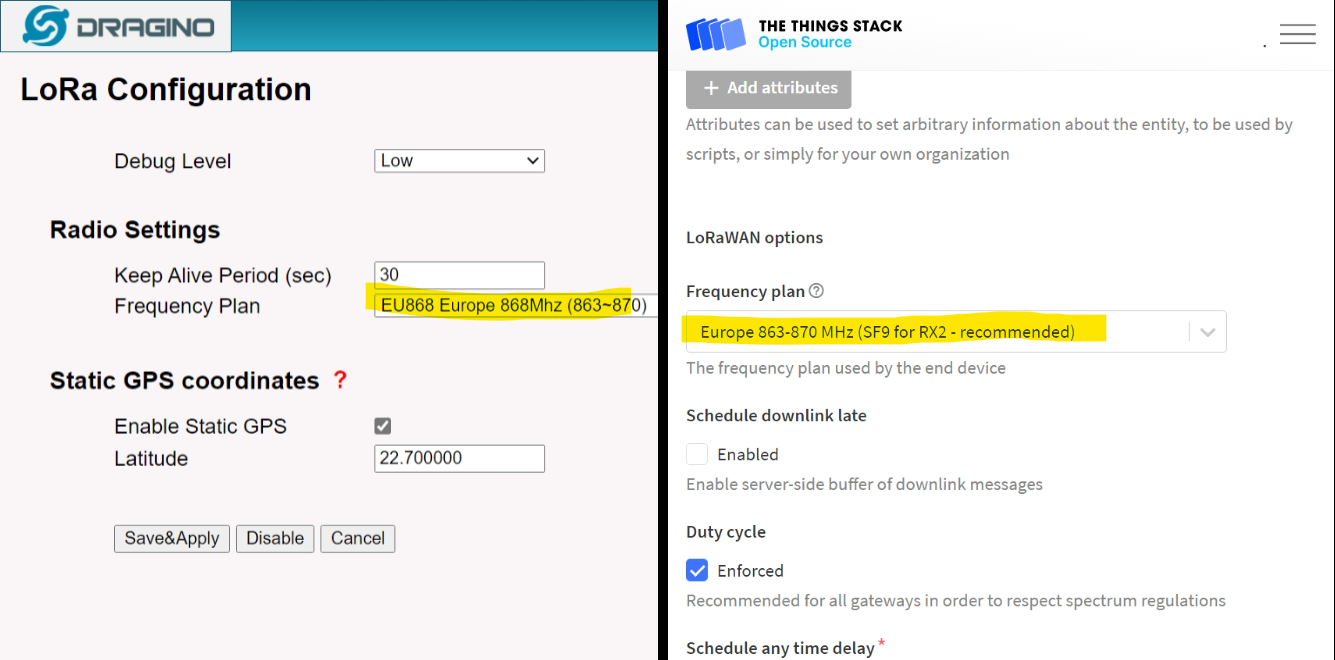

Pick the frequency plan corresponding to match your region and what your gateway is configured (see “LoRa Configuration” page)

-

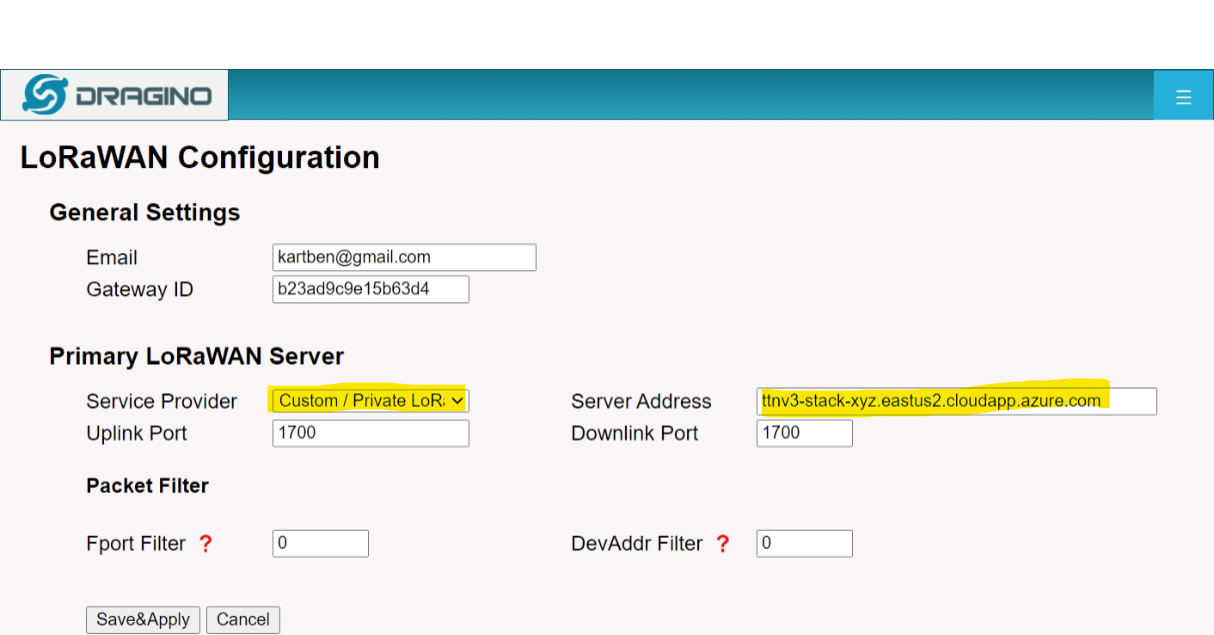

Once the gateway is created in your Things Stack instance, you can go back to the LoRaWAN Configuration of the gateway, and update the “LoRaWAN Server” in order to actually connect the gateway to your very own LNS.

- Set the Service Provider field to: “Custom / Private LoRaWAN”.

- Set the Server Address to the host name of your Things Stack server instance, ex.

ttnv3-stack-xyz.eastus2.cloudapp.azure.com.

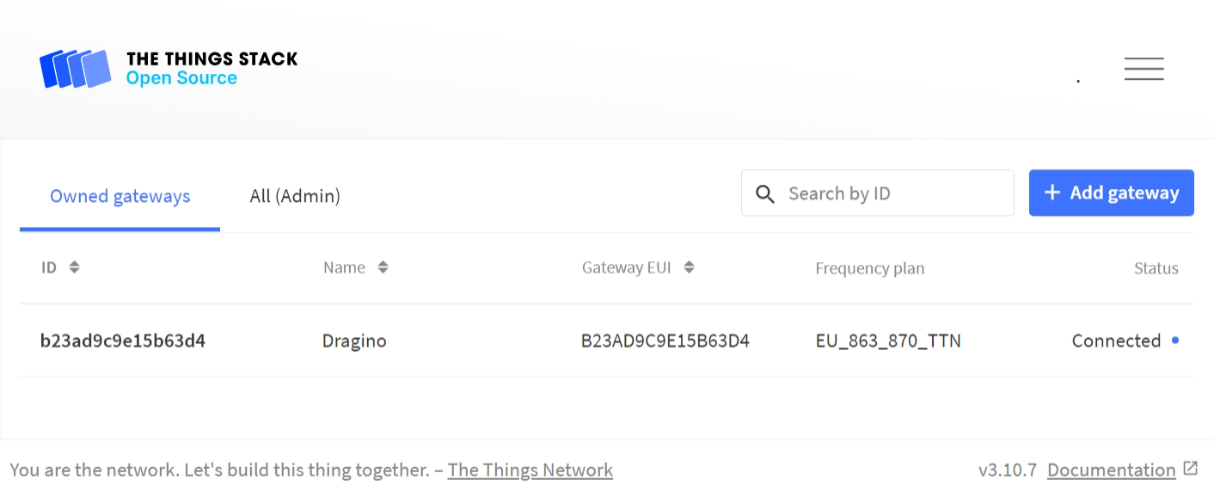

Save & Apply the settings, and The Things Stack should shortly start showing it as “Connected”!

Provisioning a LoRaWAN application + associated sensors

-

Follow the official instructions to register a new application for our smart building

-

Once the application is created, navigate to the “Devices” menu of your application, and once again use the official instructions to register the temperature sensors.

The LHT65 sensors support OTAA activation mode, and all the information needed to provision them should be provided on the sticker of the box your sensor came with.

-

Finally, we need to configure the payload formatter in order to unpack the binary/opaque payload that the temperature sensors are sending as uplink message.

Just head over to the Payload Formatters / Uplink section of your application in The Things Stack console, and use the following snippet as the Javascript formatter:

function decodeUplink(input) { var data = {}; var bytes = input.bytes; switch (input.fPort) { case 2: data = { $metadata: { $model: 'dtmi:com:dragino:lht65;2' }, //Battery,units:V batteryLevel: ((bytes[0]<<8 | bytes[1]) & 0x3FFF)/1000, //SHT20,temperature,units:C builtInTemperature:((bytes[2]<<24>>16 | bytes[3])/100), //SHT20,Humidity,units:% builtInHumidity:((bytes[4]<<8 | bytes[5])/10), //DS18B20,temperature,units:C temperature: { "1":((bytes[7]<<24>>16 | bytes[8])/100), }[bytes[6]&0xFF], } break; } return { data: data }; } -

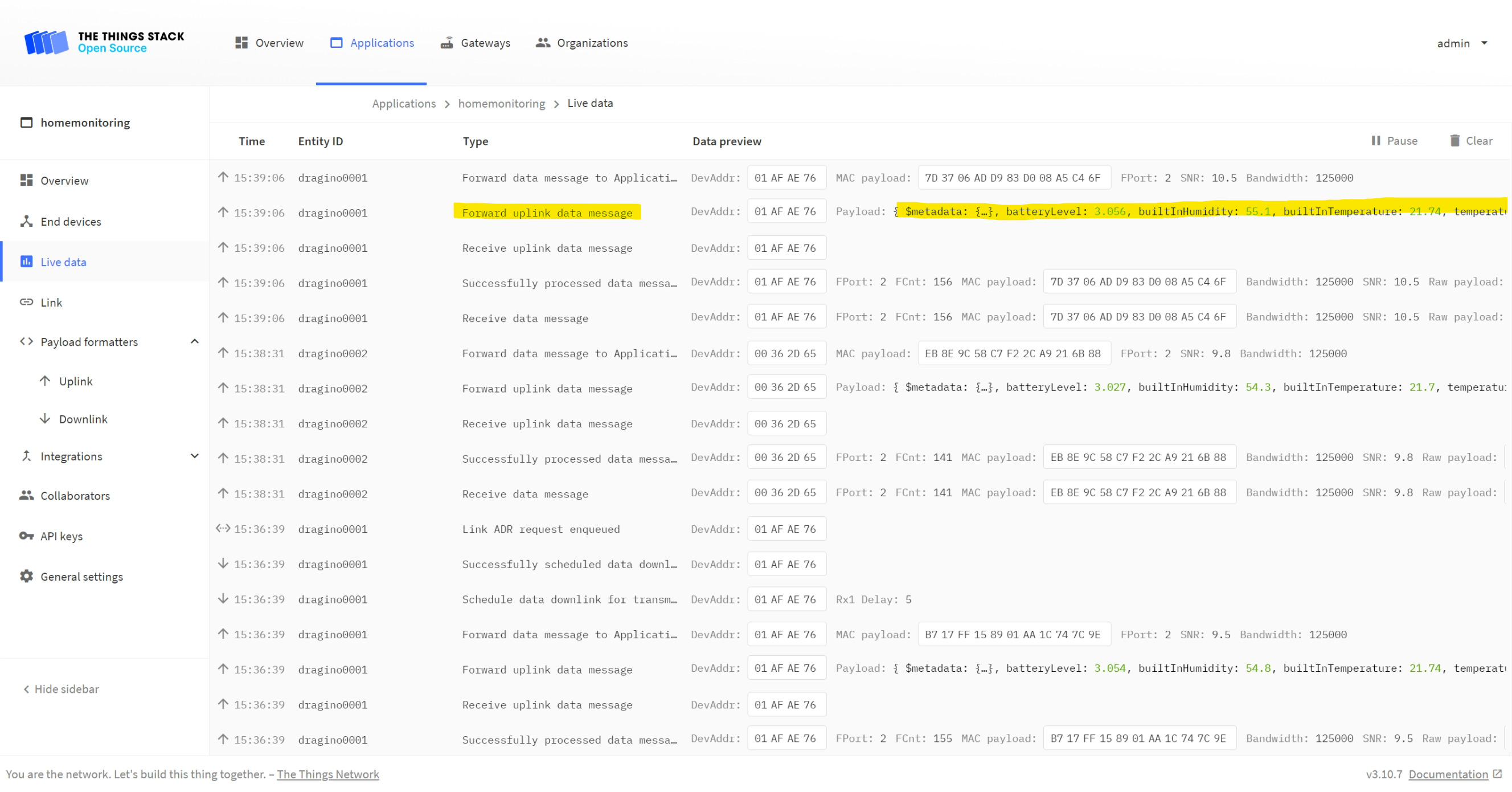

Make sure your sensor(s) are out of the deep sleep state they’re in if you just got them by pressing the “ACT” button for 5+ seconds. Your sensors should now start sending uplink messages every 20 minutes, and your application logs should confirm that payloads are being properly decoded.

Note: If you do not own a Dragino LHT-65 temperature sensor, or even an actual gateway, we still have you covered! The Things Stack allows you to simulate uplink messages, effectively allowing you to pretend that an actual device is sending data.

Just create a random device in your application (frequency plan, activation mode, etc. don’t really matter in this case), Then head over to the “Messaging” section of the device, and use any of the following payload examples (hex-encoded) to simulate uplink traffic:

cb f2 07 f5 01 c7 01 07 bd 7f ff

This corresponds to a battery level of 3.058V, a humidity level of 45.5%, temperature of 20.37℃, and temperature as reported by the external sensor of 19.81℃.

cb f2 07 f8 01 a1 01 07 bd 7f ff

This one corresponds to a battery level of 3.058V, a humidity level of 41.7%, temperature of 20.4℃, and temperature as reported by the external sensor of 19.81℃.

Now that our sensors are connected and that intelligible sensor data is flowing through our application server, we can setup the Azure Digital Twins instance where we will be describing how our sensors fit into a larger picture, one where we will be manipulating the digital twin of an entire building.

Setting up an Azure Digital Twins environment

-

Follow the install instructions from the Azure Digital Twins Documentation in order to setup a new Azure Digital Twins instance in your Azure subscription, and to configure the ADT Explorer tool to start interacting with it.

Note: In order to centralize all the Azure resources used for this tutorial, you will want to create the Azure Digital Twins instance in the same resource group as the one used for deploying The Things Stack earlier.

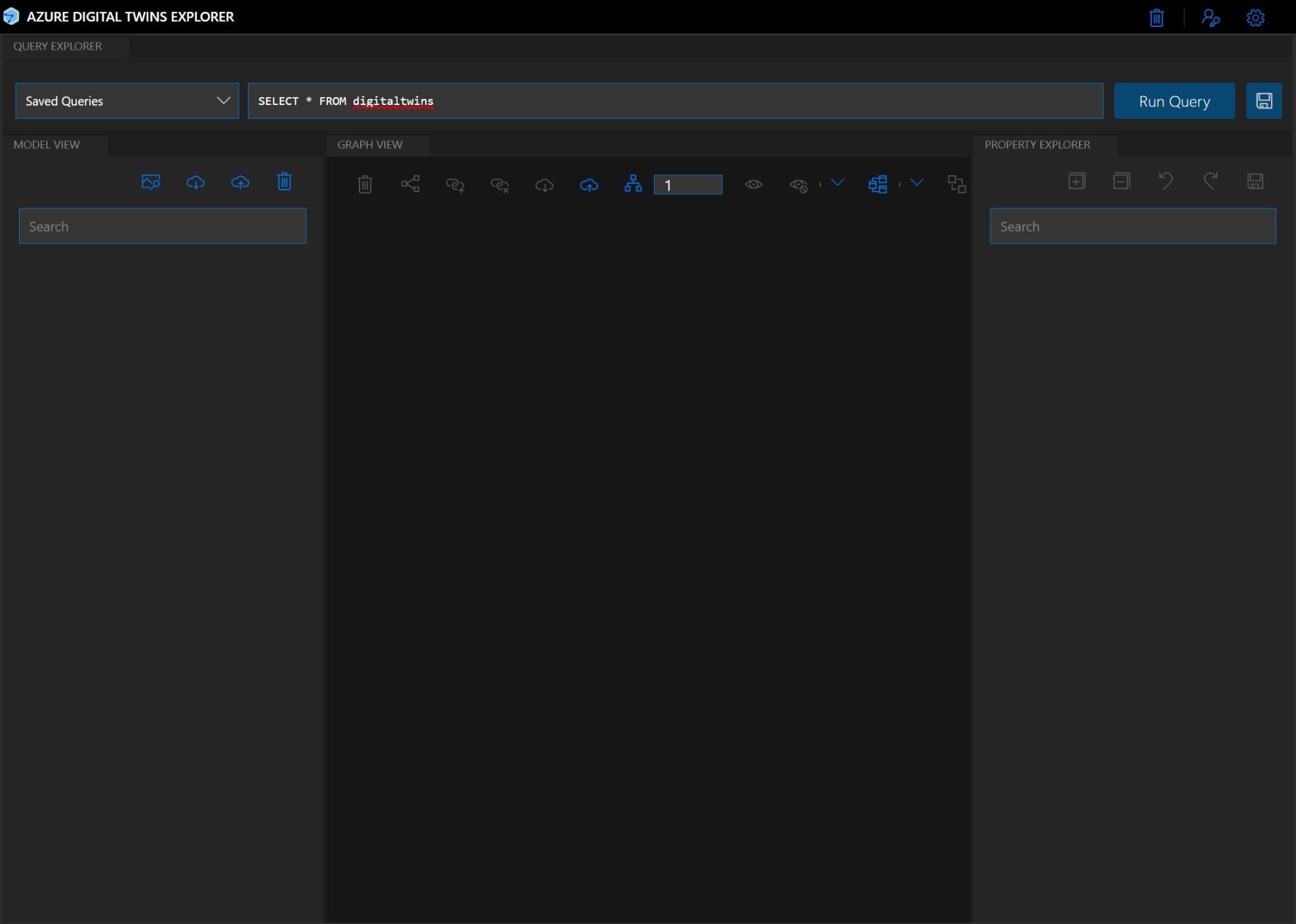

Once your ADT instance and ADT Explorer are fully setup, you should be able to just open your favorite web browser at http://localhost:3000, and start using the Explorer. The first time you open it, it will ask you for the URL of your ADT endpoint.

You can then try to run the default query, which should not throw any error—and return no twin whatsoever, as our instance is fresh off the press!

-

Use the ADT Explorer user interface (

icon in the Model View pane) to import the three JSON files contained in the

icon in the Model View pane) to import the three JSON files contained in the models/folder (building.json,room.json,Dragino LHT65.json) into your ADT instance.Optionally, you can also associate the PNG icon files found in the very same folder to each entity (

icon).

icon). -

The next step consists in creating the twin graph describing our smart building environment. For each of the following entities, use the

icon to create new entities:

icon to create new entities:building001, of type Building ;bedroom001, of type Room ;bedroom002, of type Room ;kitchen, of type Room ;bathroom, of type Room ;livingroom, of type Room.

Note: We are deliberately not creating the twins for our sensors manually, as they will be automatically be inserted in the graph once we have completed the integration between the LoRaWAN Network Server and ADT, later in the tutorial.

-

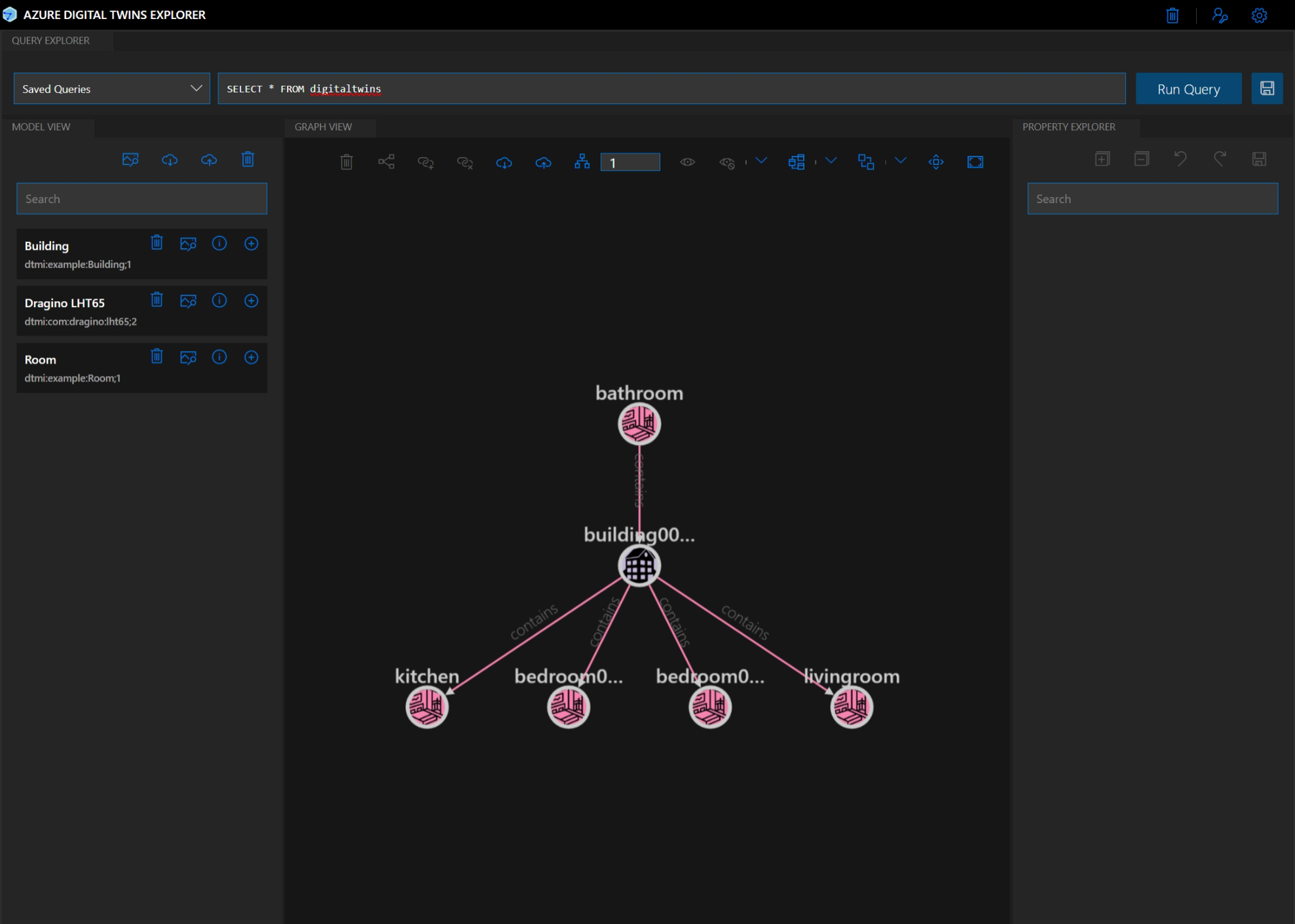

Finally, we need to attach the various rooms to the building we’ve created. Select

building001in the graph and, while maintaining the SHIFT (⇧) key pressed, selectbedroom001; you can now use the to create a

to create a containsrelationship between the two twins.Repeat this step for all the other rooms, in order to add them all to

building001. Your twin graph should now look like the following.

Bridging The Things Stack to Azure Digital Twins

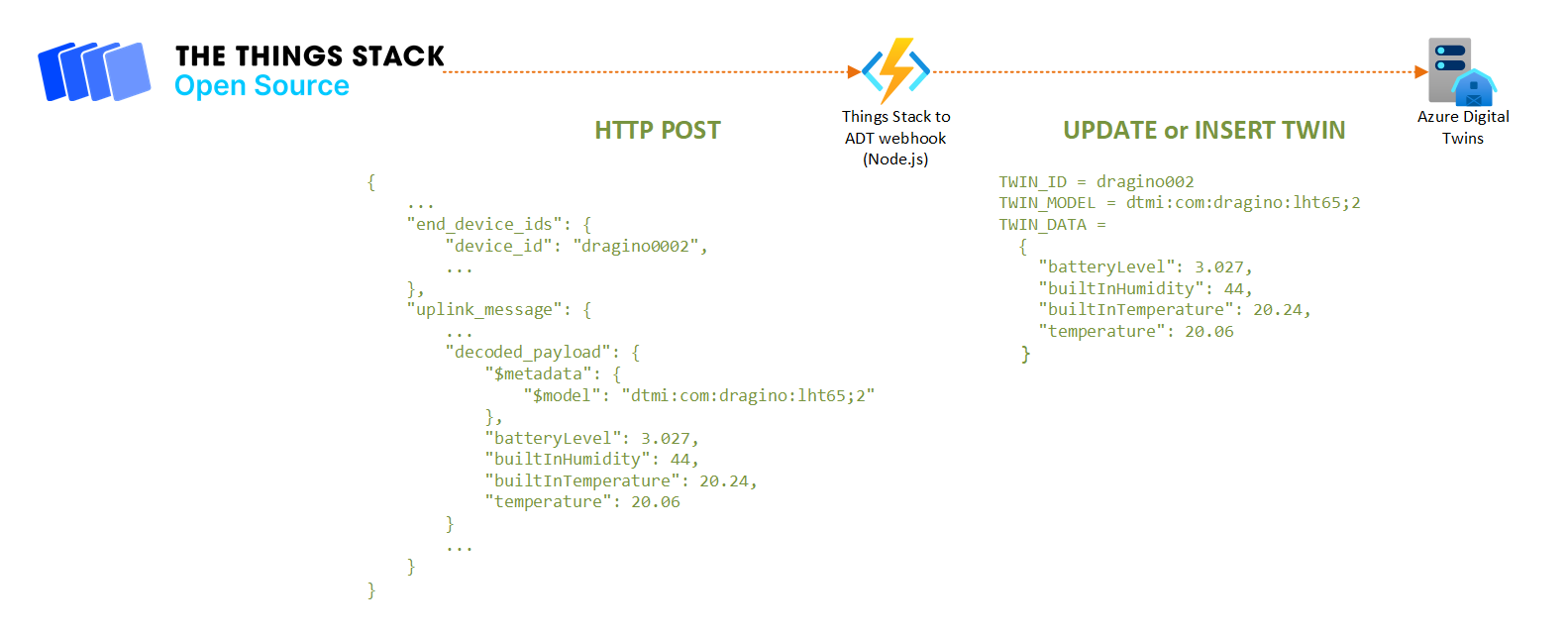

At this point, we have actual sensors connected to a LoRaWAN network and reporting temperature, humidity, and battery level at regular intervals. We also have a twin graph modeling, in a very simplified way, a building.

We now need to bridge the LoRaWAN application server to our Azure Digital Twins instance so that our sensors, together with the data they are reporting, appear in the twin graph. Since the payload formatter that we have configured for our application in The Things Stack console is already taking care of not only decoding the uplink binary payload into meaningful sensor values, but also setting a DTDL Model Identifier (DTMI), it shouldn’t be too difficult to update the twin graph each time an uplink hits the application server.

Let’s deploy a serverless function that will be called as a webhook from the Things Stack application server for each uplink.,It will take care of creating/updating the digital twin corresponding to the sensor associated to the uplink.

-

Follow the official instructions to deploy a Function App from the Azure Portal.

Note: If you are familiar with the VS Code extensions for Azure, feel free to do the following from VS Code rather than using the Azure Portal.

- Subscription: use the same subscription you used for the previous steps ;

- Resource Group: use the same resource group you used for the previous steps ;

- Function App Name: a name of your choice for the Function App instance ;

- Publish: select “Code” ;

- Runtime stack: Node.JS ;

- Version: 14 LTS ;

- Region: use the same region you used for the previous steps.

For “Hosting”, stick to the default options, i.e. create a new storage account, use Windows as the Operating System, and setup a Consumption plan.

-

Go to the “Settings / Configuration” section of the Function App, and give it a System Assigned Managed Identity: setting the “Status” toggle to “On”, and press “Save”.

After confirming your choice, wait a few seconds until the page refreshes itself and show the “Object ID”, i.e the unique identifier that will allow to grant the serverless function access to the Azure Digital Twins instance.

-

Without leaving the active page, you can directly click on “Azure role assignments”. There, add the following role assignment (“Add Role Assignment (Preview)”):

- Scope: Subscription ;

- Subscription: the subscription you’ve been using thus far ;

- Role: “Azure Digital Twins Data Owner”.

-

Go to the “Settings / Identity” section of the Function App, and add (“New Application Setting”) the following two application settings:

-

Name:

WEBSITE_RUN_FROM_PACKAGE// Value:https://raw.githubusercontent.com/kartben/lorawan-adt-demo/master/ttn-to-adt-azurefn/package.zip -

Name:

AZURE_DIGITALTWINS_URL// Value: The URL of your Azure Digital Twins Endpoint, ex.https://myadtinstance.api.eus2.digitaltwins.azure.net

Hit “Save”.

-

-

After a brief moment, go to the “Functions / Function” section of the Function App. A function called “

ttn-webhook” should now be visible, as the Function App has downloaded thepackage.zip. Click on it, and hit the “Get Function URL” button in order to get the URL of the endpoint where your newly deployed serverless function can be accessed. It should look similar tohttps://myfunctionapp.azurewebsites.net/api/ttn-webhook?code=Mmlyb2lwbHdlZmxrc2RqMjA5amtsZnNkZGtzbGo= -

Finally, in the “Integrations / Webhook” section of your application in The Things Stack console, add a new webhook:

- Select the “Custom Webhook” template ;

- Webhook ID: a unique ID of your choice ;

- Webhook format: JSON ;

- Base URL: the URL of your serverless function, as per the previous step ;

- Enabled messages: select the “Uplink message” checkbox.

Confirm the creation of the webhook by clicking “Add webhook”.

From now on, each time an uplink message is sent by an end node provisioned in this application, the webhook will be called. If you’re curious, you’ve already checked by now what it is actually doing: given a sensor ID, a decoded payload, and a digital twin model ID (DTMI), it performs an “upsert” in the ADT instance available at AZURE_DIGITALTWINS_URL. And voila!

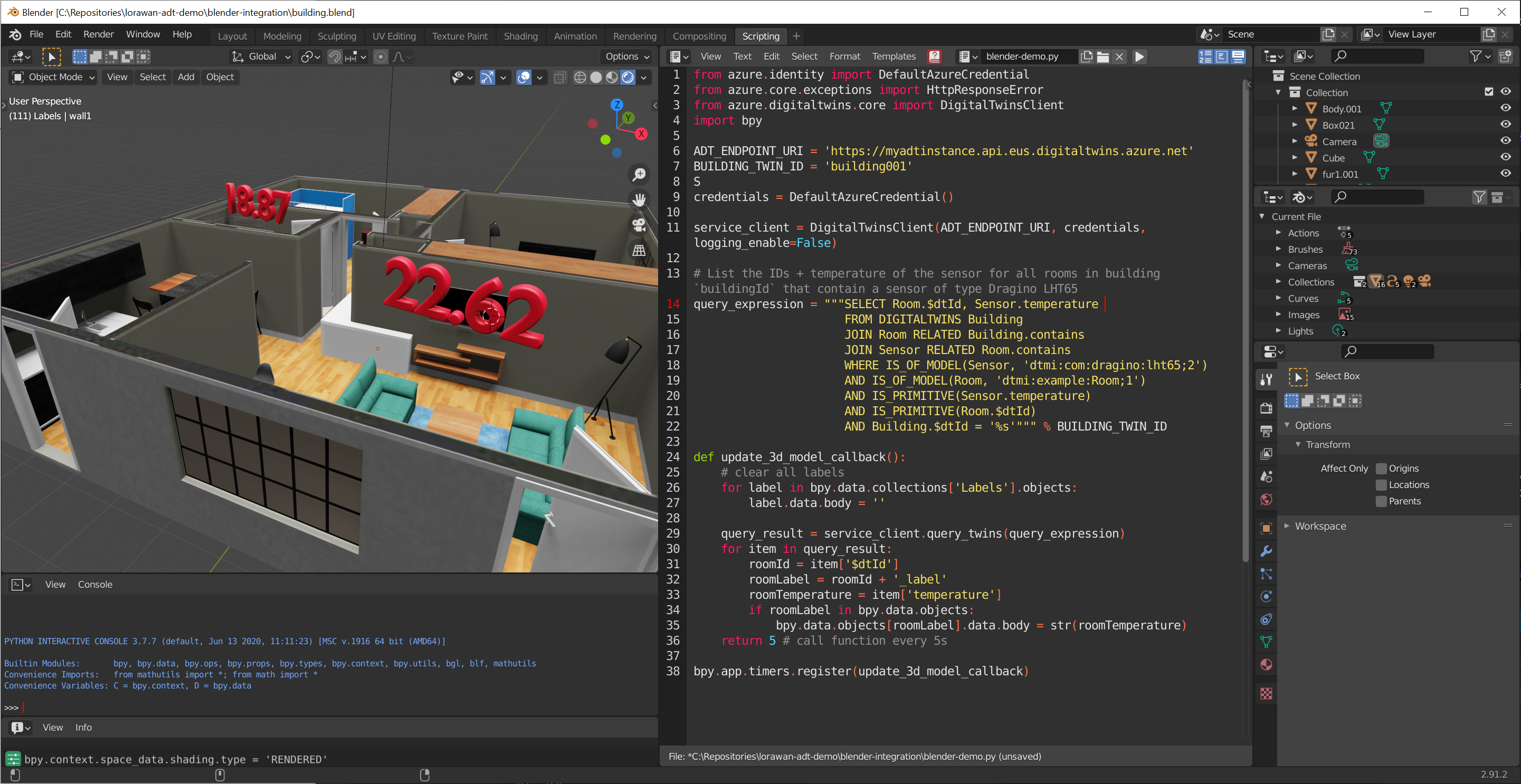

Real-time 3D visualization using Blender & Azure Digital Twins SDKs

Now that we have sensor data flowing into our Azure Digital Twins graph, we are going to look at how this graph can be queried from a 3rd party application, Blender in our case, in order to visualize and contextualize our digital twins.

-

From the

python\binfolder of your Blender install, run the following (requires admin privileges) commands to install the Azure Digital Twins Python SDK and its dependencies:./python -m pip install --upgrade pip ./python -m pip install azure-digitaltwins-core azure-identity -

Launch Blender and open the

blender-integration/building.blendfile. -

Update the following variables in the script:

- replace

<ADT_ENDPOINT_URI>with the actual URI of your ADT endpoint, ex.https://myadtinstance.api.eus2.digitaltwins.azure.net; - replace

<BUILDING_TWIN_ID>with the id you gave to the Building twin you created earlier, ex.building001.

- replace

-

Use the Alt+P shortcut or press the play button in the text editor’s toolbar in order to start executing the script.

After a few seconds—the UI might momentarily become unresponsive—, the 3D model should get updated, and any existing big 3D red temperature label should disappear. That’s expected behavior: if you look closely at the Digital Twin query (line 14), it is fetching all the rooms that contain a sensor. Since there is no room (yet!) meeting the criteria, the query returns an empty result ; therefore, besides lines 26-27 clearing up the labels, nothing else happens.

-

Use the Azure Digital Twins Explorer and re-run the default

SELECT * FROM digitaltwinsquery. You should now see something—that you probably expected if you’ve been paying attention: there are new twins for the Dragino LHT65 sensors.As the sensors have started to send uplink LoRaWAN messages, The Things Stack has picked those up, decoded them, and called the Azure Functions webhook that has created (and later updated) the digital twins corresponding to the sensors.

And that’s it, you should now see your 3D model update in near real-time (that is, every 5 seconds)! Whenever your sensors send new data, the twin graph gets updated, and the query picks up the updates to the twins. If the twin of a room happens to be connected to a sensor in the graph, the temperature of this room is automatically showing up above the room’s ceiling!

Going further

If you reached this point, and if you are interested in experimenting further with the concepts of digital twins, here’s a non-exhaustive lists of things you may want to consider:

- Implement a push mechanism in order to replace the aggressive and unnecessary polling which the Blender Python script is currently doing every 5 seconds. You could use SignalR to that effect, see the “Integrate Azure Digital Twins with Azure SignalR Service” section in the Azure Digital Twins documentation ;

- Extend the Blender script to not only update the 3D scene based on the digital twins’ state, like we are doing at the moment, but also to update the twins based on interactions happening with the 3D digital model (ex. flipping a “virtual” wall switch) ;

- Add additional data sources. You twin graph can be populated by virtually any source, including of course telemetry coming from devices connected to **Azure IoT Hub.

Author

👤 Benjamin Cabé

- Website: https://blog.benjamin-cabe.com

- Twitter: @kartben

- Github: @kartben

- LinkedIn: @benjamincabe

🤝 Contributing

Contributions, issues and feature requests are welcome!

Feel free to check issues page.

Show your support

Give a ⭐️ if this project helped you!

📝 License

Copyright © 2021 Benjamin Cabé.

This project is MIT licensed.

This README was generated with ❤️ by readme-md-generator